Why would a Russian oil company be targeting American voters?

Cambridge Analytica➡️BCCI 2.0

Cambridge Analytica and Online Manipulation

It's not just about data protection; it's about strategies designed to induce addictive behavior, and thus to manipulate

Marcello Ienca, Effy Vayena, March 30, 2018

The Cambridge Analytica scandal is more than a “breach,” as Facebook executives have defined it. It exemplifies the possibility of using online data to algorithmically predict and influence human behavior in a manner that circumvents users’ awareness of such influence. Using an intermediary app, Cambridge Analytica was able to harvest large volumes of data (over 50 million raw profiles) and use big data analytics to create psychographic profiles in order to subsequently target users with customized digital ads and other manipulative information. According to some observers, this massive data analytics tactic might have been used to deliberately swing election campaigns around the world. The reports are still incomplete, and more is likely to come to light in the coming days.

Although different in scale and scope, this scandal is not entirely new. In 2014, Facebook and researchers at Cornell University published the results of a colossal online psychosocial experiment conducted on almost seven hundred thousand unaware users, whose newsfeeds had been algorithmically modified to observe changes in their emotions. The study, which appeared in the prestigious Proceedings of the National Academy of Sciences (PNAS), showed the ability of the social network to make people happier or sadder on a massive scale and without their awareness, a phenomenon that was labeled “emotional contagion.” As in the Cambridge Analytica case, Facebook’s emotional contagion study sparked harsh criticism, with experts calling for new standards of oversight and accountability for social-computing research.

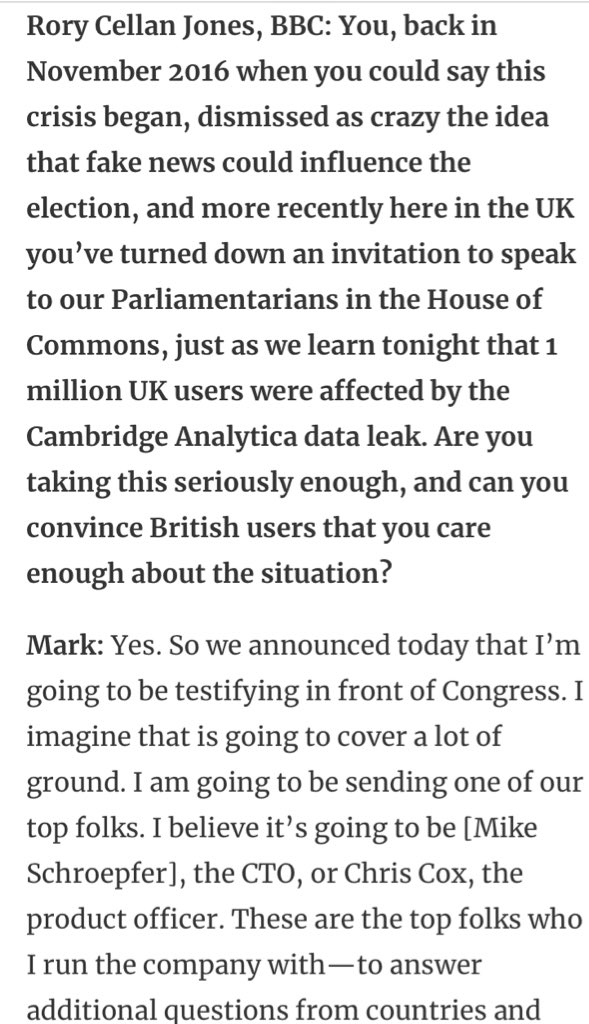

A common lesson from these two different cases is that Facebook’s privacy policy is no absolute guarantee of data protection: in 2014, it allowed the reuse of data for research purposes even though “research” was not listed in the company’s Data Use Policy at the time of data collection. A couple of years later, it allowed an abusive app to collect data not only on users who signed up for it, but also on their friends. Mark Zuckerberg himself has admitted that the data were not protected as they should have been. In trying to make sense of this scandal, however, there are two more subtle considerations that go beyond data protection:


First, accepting terms of service (ToS) and privacy policies (PP) is a prerequisite for using most online services, Facebook included. Nonetheless, it is no secret that most people accept ToSs without even scrolling to the end of the page. This well-known phenomenon raises the question of whether online agreements qualify as informed consent. Berggruen Prize winner Onora O’Neill has argued that “the point of consent procedures is to limit deception and coercion,” hence they should be designed to give people “control over the amount of information they receive and opportunity to rescind consent already given.”

Online services, from the most mainstream, like Facebook and Twitter, to the shadiest, like Cambridge Analytica, seem to do exactly the opposite. As a German court recently ruled, there is no guarantee that people are sufficiently informed about Facebook’s privacy-related options before registering for the service; hence informed consent might be undermined. On top of that, the platform hosts activities that use online manipulation to reduce people’s rational control over the information they generate or receive, be it in the form of micro-targeted advertising or fake-news-spreading social bots. Instead of being limited, deception is normalized.

In this ever-evolving online environment characterized by weakened consent, conventional data protection measures might be insufficient. What data are used for is unlikely to be controlled by the users who provided the data in the first place. Data access boards, monitoring boards and other mechanisms have a better chance to control and respond to undesirable uses. Such mechanisms have to be part of a more systemic oversight plan that spreads throughout the continuum of regulatory activities and responds to unexpected events across the life cycle of data uses. This approach can target new types of risk and emerging forms of vulnerability arising in the online data ecosystem.

The second consideration is that not just Cambridge Analytica, but most of the current online ecosystem, is an arms race to the unconscious mind: notifications, microtargeted ads and autoplay plugins are all strategies designed to induce addictive behavior, and hence to manipulate. Researchers have called for adaptive regulatory frameworks that can limit information extraction from and modulation of someone’s mind using experimental neurotechnologies. Social computing shows that you don’t necessarily have to read people’s brains to influence their choices. It is sufficient to collect and mine the data they regularly, and often unwittingly, share online.

Therefore, we need to consider whether to set a firm threshold for cognitive liberty in the digital space. Cognitive liberty highlights the freedom to control one’s own cognitive dimension (including preferences, choices and beliefs) and to be protected from manipulative strategies that are designed to bypass one’s cognitive defenses. This is precisely what Cambridge Analytica attempted to do, as its managing director revealed during an undercover investigation by Channel 4 News: the company’s aim, he admitted, is “to take information onboard effectively” using “two fundamental human drivers,” namely “hopes and fears,” which are often “unspoken or even unconscious.”

Attempts to manipulate other people’s unconscious minds and associated behavior are as old as human history. In Ancient Greece, Plato warned against demagogues: political leaders who build consensus by appealing to popular desires and prejudices instead of rational deliberation. However, the only tool the demagogues of ancient Athens could use to bypass rational deliberation was the art of persuasion.

In today’s digital ecosystem, wannabe demagogues can use big data analytics to uncover cognitive vulnerabilities from large user datasets and effectively exploit them in a manner that bypasses individual rational control. For example, machine learning can be used to identify deep-rooted fears among pre-profiled user groups which social-media bots can subsequently exploit to foment anger and intolerance.

The recently adopted EU General Data Protection Regulation, with its principle of purpose limitation (data collectors are required to specify the purpose of collecting personal information at the time of collection), is likely to partly defuse the current toxic digital environment. However, determining where persuasion ends and manipulation begins is a question that goes, as the European Data Protection Supervisor (EDPS) recently admitted, “well beyond the right to data protection.”

The EDPS has underscored that microtargeting and other online strategies “point towards a culture of manipulation in the online environment” in which “most individuals are unaware of how they are being used.” If recklessly applied to the electoral domain, they could even reduce “the space for debate and interchange of ideas,” a risk that “urgently requires a democratic debate on the use and exploitation of data for political campaign and decision-making.” Last year, international experts addressed the question of whether democracy will survive big data and artificial intelligence. The answer will partly depend on how we govern data flows and protect the liberty of the individual mind.

https://blogs.scientificamerican.com/ob ... ipulation/

Larisa Alexandrovna is describing this as BCCI 2.0.

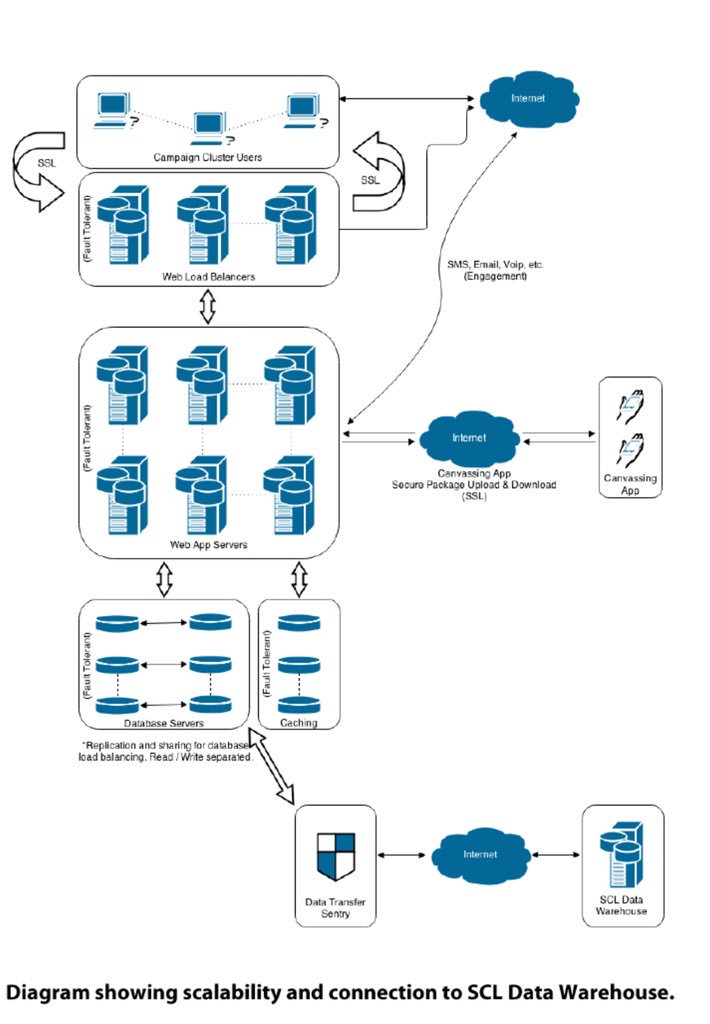

"Cambridge Analytica is a shell corp majority owned by Robert Mercer created to hide foreign actors and optics to obscure SCL Group, a military contractor with a tainted past. He came forward because military grade psychological operations have no place in democracies" h/t to Prof David Carroll (who is suing CA on behalf of the US public).

Cambridge Analytica Affiliate Gave John Bolton Facebook Data, Documents Indicate

by Jeremy Kahn

March 29, 2018, 10:06 AM CDT

Parliament releases trove of whistleblower documents, emails

Evidence bolsters claims U.K. company didn’t destroy data

A British company at the heart of the Facebook Inc. data-privacy scandal agreed to give a political action committee founded by John Bolton, U.S. President Donald Trump’s newly appointed national security adviser, data harvested from millions of Facebook users, documents released by Parliament show.

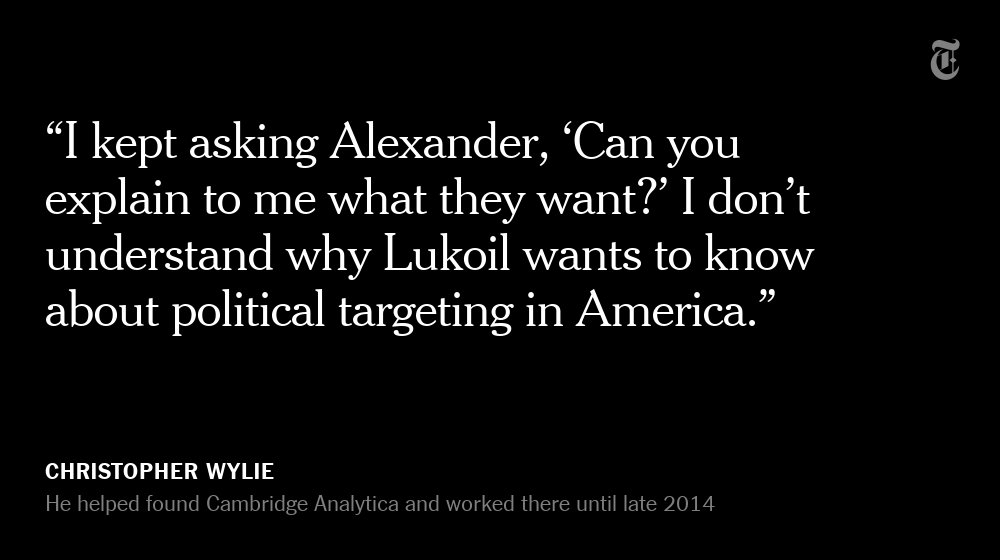

The papers were provided by whistle-blower Christopher Wylie, a former employee of both Cambridge Analytica and its affiliate company SCL Elections, part of London-based SCL Group. On Thursday, the U.K. Parliament’s Digital, Culture, Media and Sport Committee released the documents, which include more than 120 pages of business contracts, emails and legal opinions.

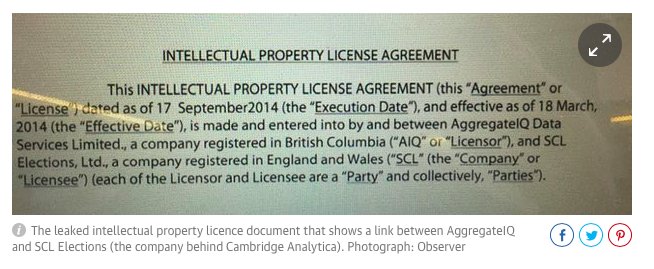

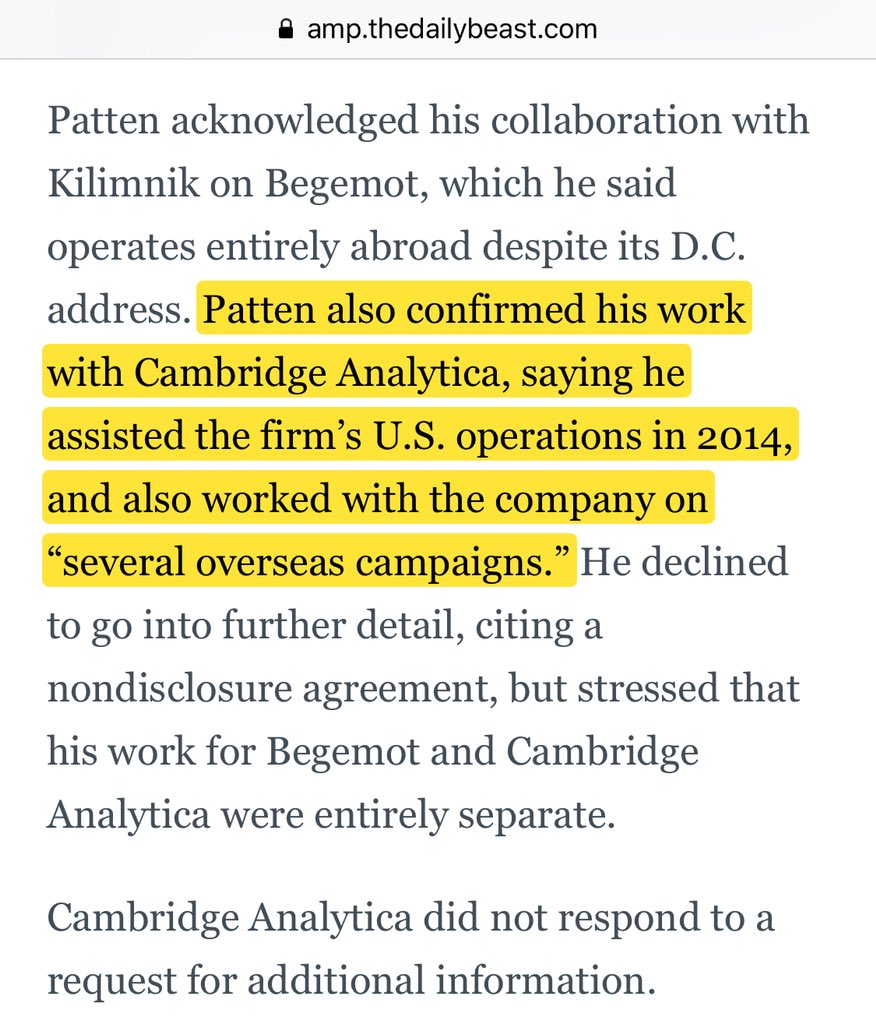

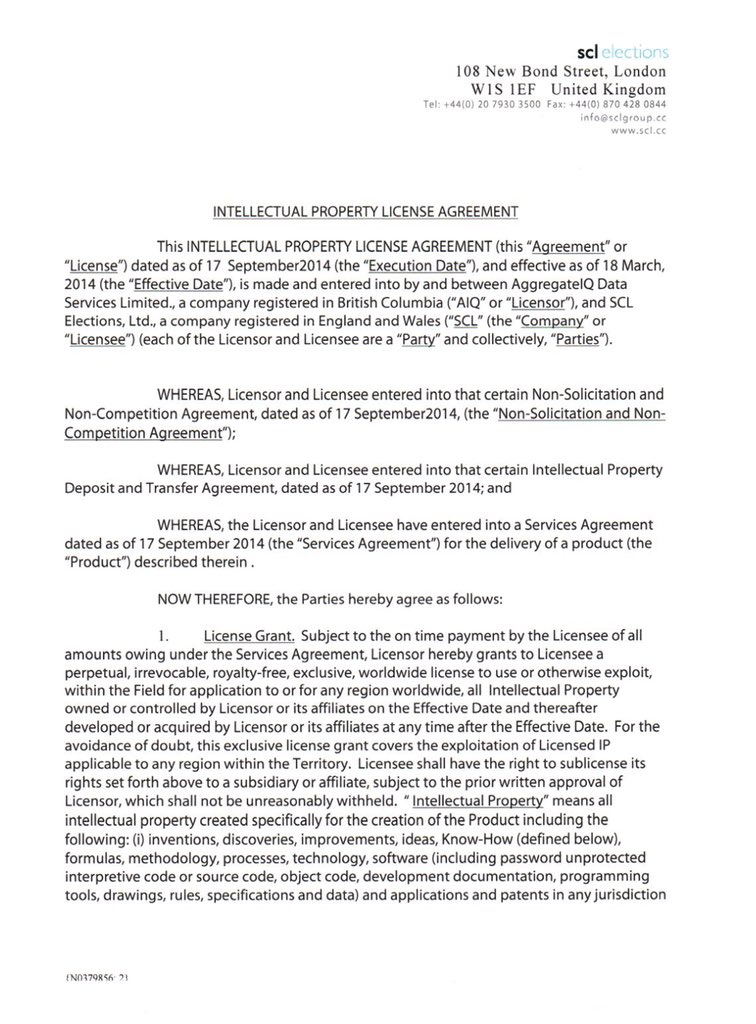

Revealed are indications that Aggregate IQ, a Canadian company that worked closely with both Cambridge Analytica and SCL, had access to data from Aleksandr Kogan, an academic who had set up an app designed to build psychological profiles of people based in part on Facebook data. The social-media giant has said that Kogan violated its terms of service by using this information for commercial purposes, but Kogan has said he is being "scapegoated" by the companies involved.

Cambridge Analytica said in a statement released Thursday that it didn’t use Facebook data from Kogan’s company in the 2016 U.S. presidential campaign. "We provided polling, data analytics and digital marketing to the campaign," the statement said. The company said it didn’t use personality profiles of the sort Kogan specialized in. And it said the data it did have was used to "identify ’persuadable’ voters, how likely they were to vote, the issues they cared about, and who was most likely to donate."

In a statement Tuesday, following Wylie’s testimony before the parliamentary committee, Cambridge Analytica said it had never provided Aggregate IQ with any data from Kogan’s company, Global Science Research.

Emails released by Parliament show that SCL Group, Cambridge Analytica’s U.K. affiliate, discussed with Aggregate IQ how it could provide Kogan’s data, and models for how to target voters in several U.S. states based on it, to Bolton’s PAC.

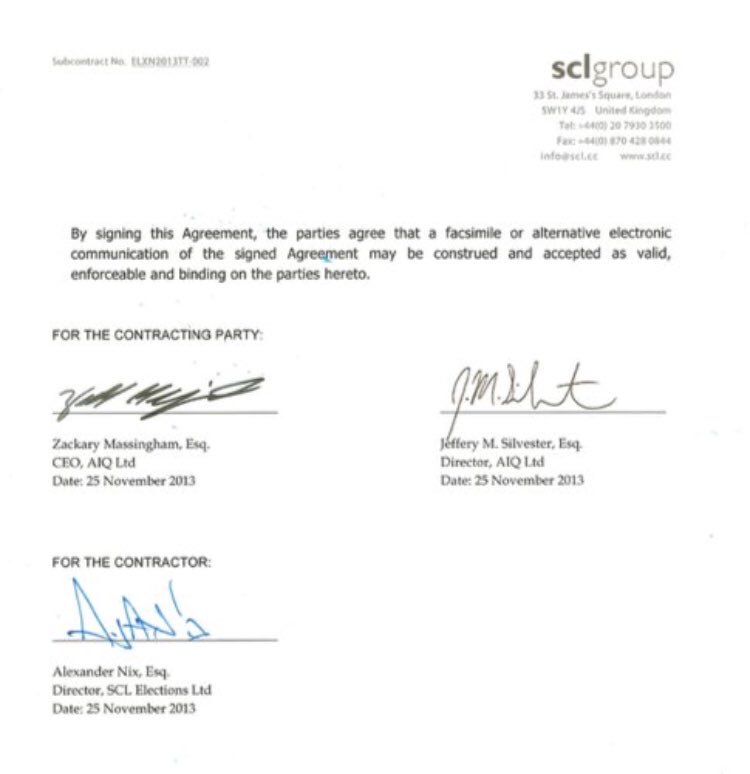

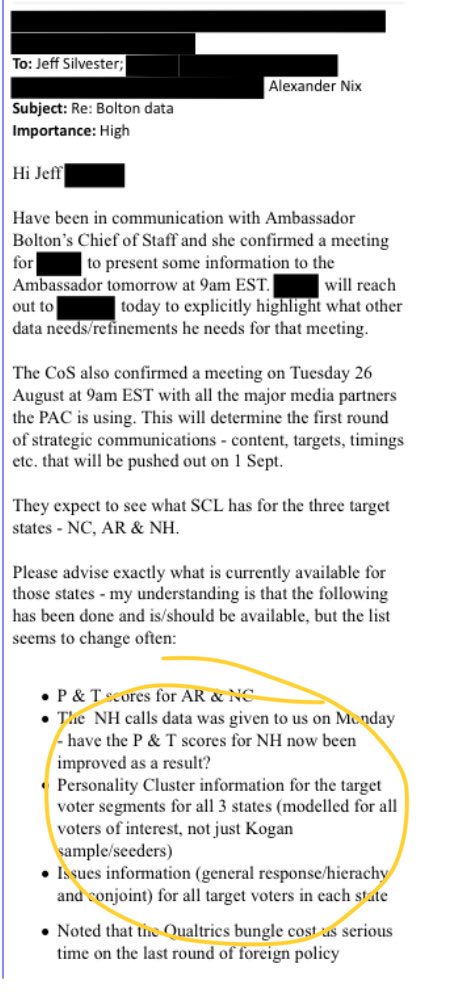

In one email chain that included Alexander Nix, the suspended chief executive officer of Cambridge Analytica, and Jeff Silvester, the co-founder of Aggregate IQ, the two discussed using Kogan’s data to help create "personality cluster information for the target voter segments" in New Hampshire, Arkansas and North Carolina.

The executives also discussed getting Kogan data from another survey firm so that he could combine it with the data he had from Facebook. "This would need to be modeled for target voters by end of next week so it can be used to help micro targeting effort to be pushed out in the following week," an unnamed individual at SCL wrote in the message, which contained numerous redacted passages.

News that Bolton’s political group, The John Bolton Super PAC, was a beneficiary of Cambridge Analytica’s psychometric profiles was first reported by The New York Times last Friday.

The documents released by Parliament also show that SCL Group contracted Aggregate IQ in November 2013, agreeing to pay the company up to $200,000 for services that included "Facebook and social media data harvesting." Another document shows that in 2014, SCL paid Aggregate IQ $500,000 to create a platform it could use to target U.S. voters.

The documents also include a confidential legal memorandum, stated to have been prepared for Rebekah Mercer, daughter of Trump supporter Robert Mercer, which warns Cambridge Analytica that it could run afoul of U.S. laws barring foreign nationals from participating in U.S. elections. The memo advises Mercer, former Trump political adviser Stephen Bannon, and Nix that Nix ought to recuse himself from supervising any of Cambridge Analytica’s U.S. election activity and that foreign nationals without green cards should not be involved in "polling and marketing" or providing strategic advice to U.S. campaigns.

The names of the lawyers who prepared the memo are redacted in the version released by Parliament. Wylie has told reporters and testified before Parliament that many of those Cambridge Analytica employed to help on U.S. campaigns were foreign citizens.

A 2012 memo from the U.K. Ministry of Defense released by the committee revealed that the British military’s psychological warfare unit paid SCL to train its staff on how to assess the effectiveness of "psychological operations" and that SCL had helped "support 15 (UK) PsyOps," including operations in Libya and Afghanistan.

https://www.bloomberg.com/news/articles ... ata-crisis